Osakabe

‘Osakabe’ is a first-person horror game created as a year 2 project at Breda University of Applied Sciences. The player is tasked with escaping a Japanese castle whilst staying clear of the Osakabe. The game and its enemy are completely AI driven, with the exception of a single lightning strike.

My Responsibilities

- Lead Designer

- Concepting, prototyping, implementing and playtesting the Osakabe AI & NPC design.

- Concepting, implementing and iterating on the Osakabe AI.

- Concepting, implementing and iterating on the spirit fox NPC.

Technical Breakdown

Click the link below to download the technical breakdown I wrote, including visuals, on how the AIs are implemented!

PD_EnemyAI_SK

Design Process

Prototyping

A main purpose of the prototyping phase was to bridge the gap between tactical stealth games, with their high degree of player agency, and atmospheric horror ‘walking simulators’. For example, instead of a security camera with UI showing a view cone, an entity swings from the ceiling with its tentacles waving around.

Concept (Proposal)

After converging all the prototyping work, a ‘finalized’ concept was formed. At this stage the proposal pitch was created and the creative vision and game loops were defined.

Pre-Production

During pre-production the concept was planned out and design documents were created. The AI systems needed to create content during production were then developed.

Production (Release)

The functional AI at the start of the production phase was iterated upon to create the shipped version. Details of this are described below.

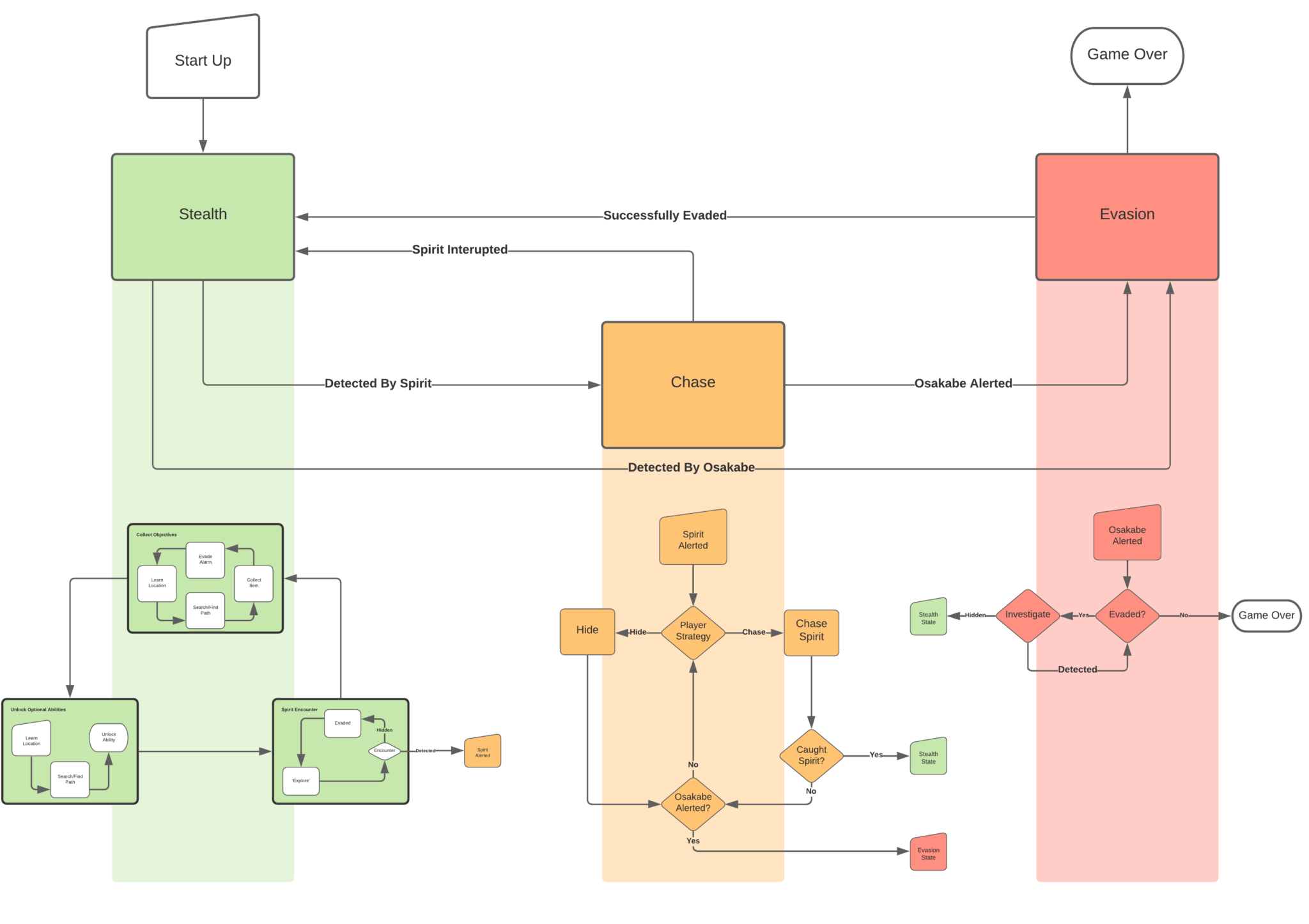

Osakabe AI Behaviour

We set out to create an AI-driven horror experience that doesn’t rely on scripted jump scares, with games such as Alien: Isolation as references, so AI behaviour was central to the project.

The design intention was to create a horror experience driven by the monster’s AI. Fear should come from player agency and not from an unavoidable jump scare behind a blind corner. The player should at all times be aware that danger comes from their decisions/mistakes and that the thing they fear has consequences that could lead to a failure state (unlike a scripted scare which lacks consequences as it is the intended path).

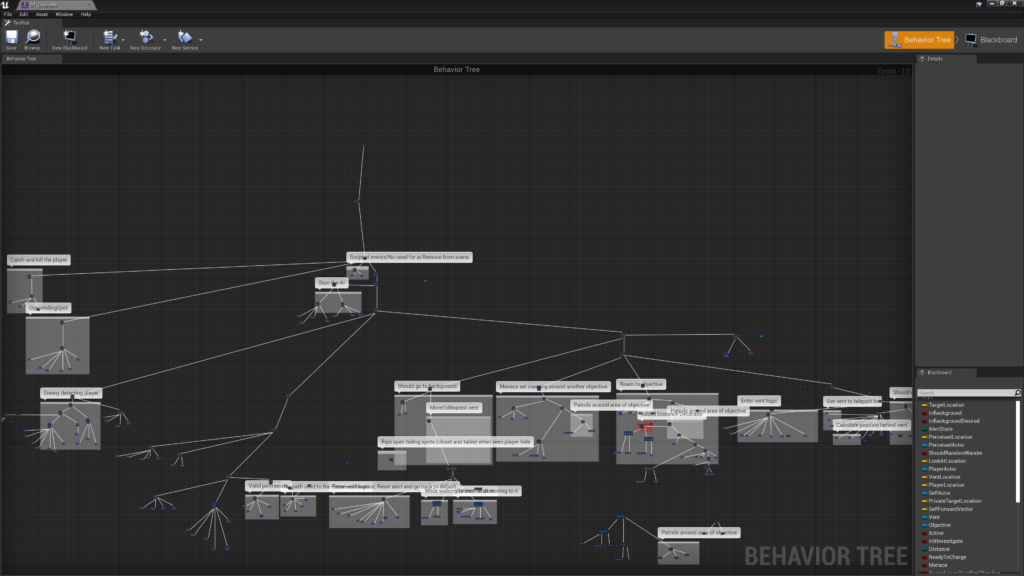

My work consisted of concepting, implementing and iterating on the AI behaviour using Blueprints, behaviour trees and EQS queries.

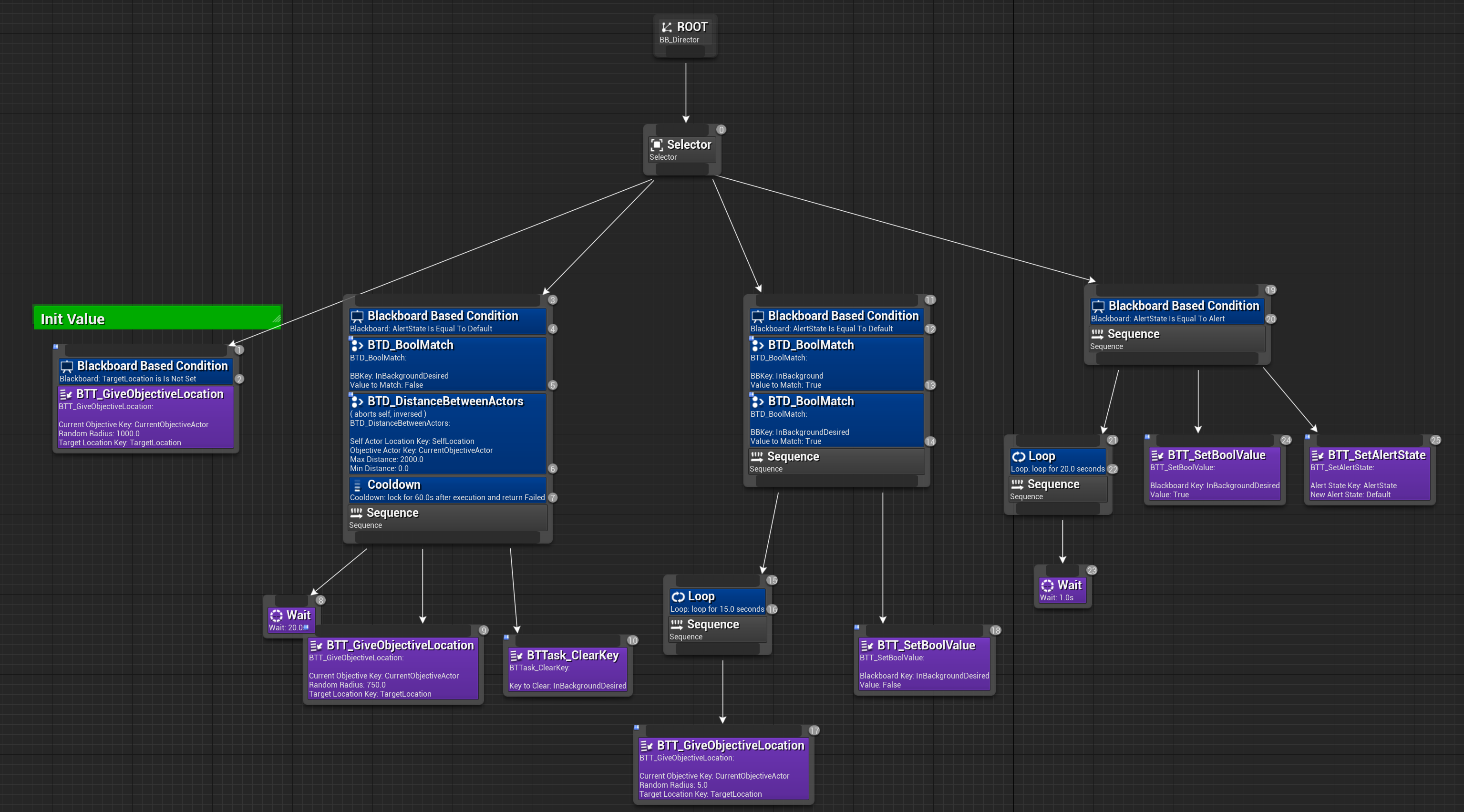

In the default behaviour the AI mainly roams the castle, unaware of any specifics about the player. Within the default state there are two sub-states, one for the AI in the foreground and one for the AI in the background, as described in the Movement section.

The primary behaviours in the default state are these:

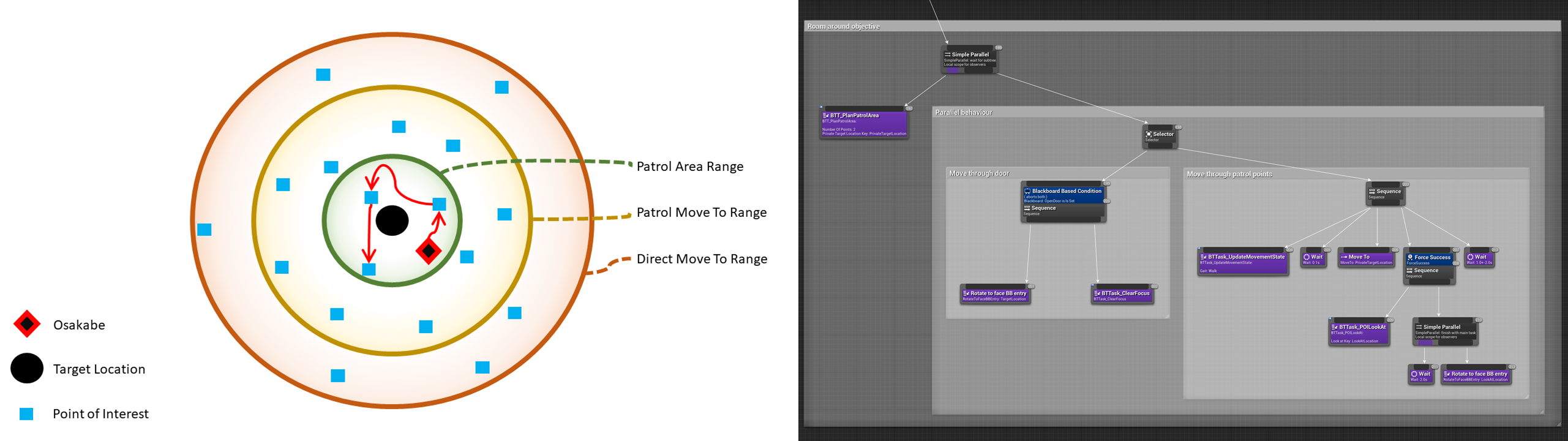

Move to objective

When the AI is away from the objective it should roam around, it will move there. Based on the distance it can either move there directly, in case of a long distance, or take a more interesting path through several ‘Points of Interest’.
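The distance-based choice described above could be sketched roughly as follows. This is a minimal illustration in Python, not the shipped Blueprint logic: the threshold `DIRECT_DISTANCE`, the `max_pois` limit and the rule of picking the nearest Points of Interest are all assumptions made for the example.

```python
import math

# Hypothetical threshold: at or beyond this distance the AI heads straight
# for its objective instead of wandering past Points of Interest.
DIRECT_DISTANCE = 30.0


def plan_route(ai_pos, objective, points_of_interest,
               direct_distance=DIRECT_DISTANCE, max_pois=2):
    """Return the waypoints to visit, always ending at the objective."""
    if math.dist(ai_pos, objective) >= direct_distance:
        # Long trip: go directly.
        return [objective]
    # Short trip: detour past the Points of Interest nearest to the AI
    # (assumed selection rule, for illustration only).
    detour = sorted(points_of_interest,
                    key=lambda poi: math.dist(ai_pos, poi))[:max_pois]
    return detour + [objective]
```

With an objective 100 units away the route is just the objective; with an objective 10 units away the route first passes the two closest Points of Interest.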

Patrol an area

When near its objective the AI will patrol the surrounding area. We don’t want the AI to stand right next to the player’s objective, so making it move around creates opportunities for the player!

Blueprint to plan patrol paths:

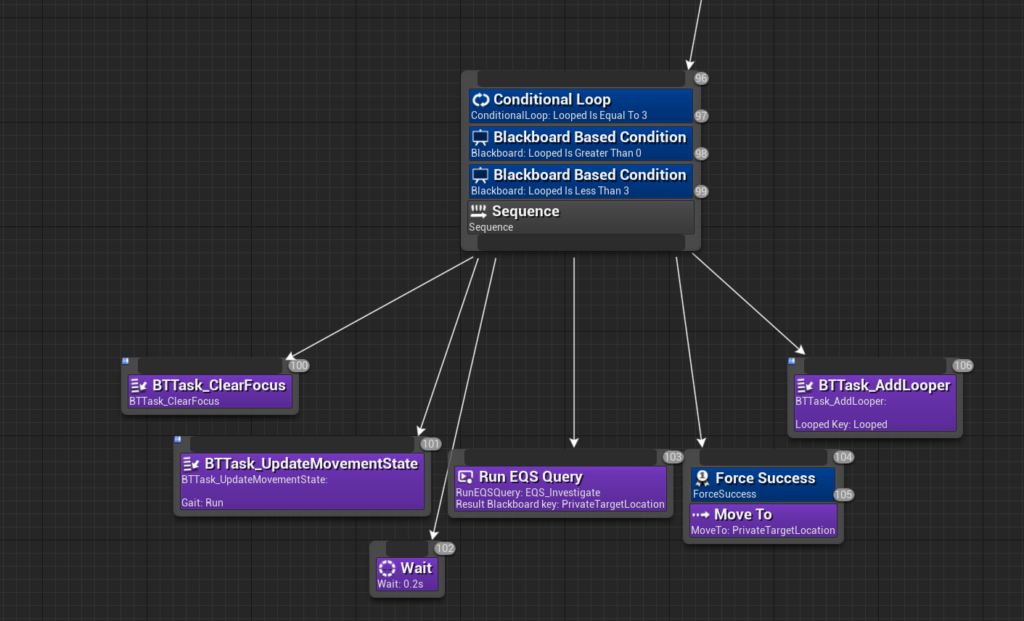
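As a rough text illustration of the patrol idea (keep moving around the objective without standing on top of it), the sketch below places patrol points on a ring around the objective. It is not a transcription of the Blueprint above; the function name, the two radii and the alternating pattern are assumptions chosen for the example.

```python
import math


def patrol_points(objective, min_radius, max_radius, count):
    """Evenly spaced points around the objective, alternating between an
    inner and an outer radius so the AI never rests on the objective."""
    ox, oy = objective
    points = []
    for i in range(count):
        angle = 2 * math.pi * i / count
        radius = min_radius if i % 2 == 0 else max_radius
        points.append((ox + radius * math.cos(angle),
                       oy + radius * math.sin(angle)))
    return points
```

Every generated point lies at least `min_radius` away from the objective, which leaves a gap the player can use while the AI is on the far side of its lap.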

During implementation and iteration the behaviour in the AI’s ‘suspicious’ state was heavily simplified. It didn’t have the intensity of being chased by an alerted enemy and it limited the player’s ability to progress. In combination with the fact that the player had few tools that could help, most players just hid and waited.

For this reason the behaviour consists of the AI going to the source that triggered the state and then doing a quick lap of the area. The player can still fix a mistake or use it as a distraction during that time, but the ‘downtime’ remains limited.

As this is a horror game without combat, the alert state is the player’s last chance to escape. When alerted the AI will run towards the player and, when in range, charge at them. If this connects it triggers the game-over state. The player’s only real chance is to quickly run away, use a tool and find a hiding spot. Of course, if the Osakabe sees them go in, it will drag them out and a game-over is still triggered. The gameplay here is purposefully limited: escape is possible, but it’s very likely the player will die after being seen. If the player could just get away easily, the fear would quickly be gone.

As we only have a single enemy and minimal Level Design control over the encounters I also set up a simple director AI.

This director can steer the Osakabe closer to the player when the two haven’t been close in a while, or away when the Osakabe has stayed too close for too long. With some more functionality this would give us more control over the pacing.

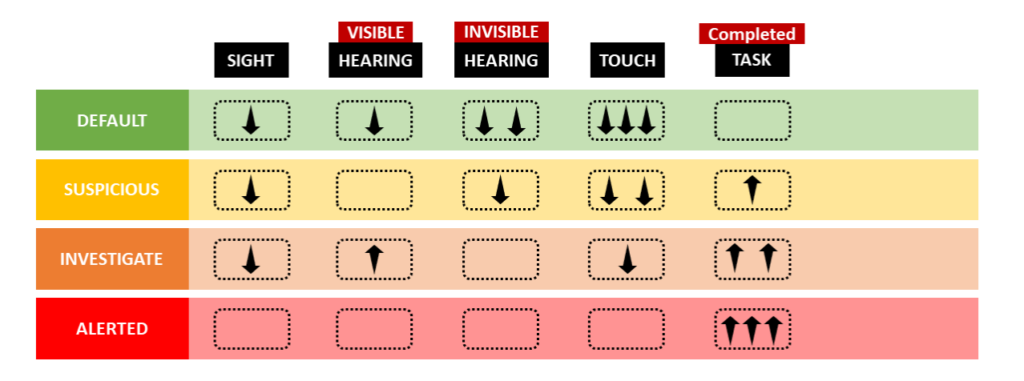
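A minimal sketch of how such a director could track pacing is shown below. The class name, the timers and all threshold values are hypothetical; the source only states that the director steers the enemy closer after a long absence and away after it lingers too long.

```python
from dataclasses import dataclass


@dataclass
class Director:
    # All values are illustrative assumptions, not tuned game data.
    near_radius: float = 15.0    # within this distance the enemy counts as "close"
    max_time_away: float = 90.0  # seconds allowed without an encounter
    max_time_near: float = 30.0  # seconds the enemy may linger nearby
    time_away: float = 0.0
    time_near: float = 0.0

    def update(self, distance_to_player: float, dt: float) -> str:
        """Advance the timers by dt seconds and return a steering hint."""
        if distance_to_player <= self.near_radius:
            self.time_near += dt
            self.time_away = 0.0
        else:
            self.time_away += dt
            self.time_near = 0.0
        if self.time_away >= self.max_time_away:
            return "move_closer"
        if self.time_near >= self.max_time_near:
            return "move_away"
        return "no_change"
```

The returned hint would then be used to pick the enemy's next roaming objective, for example one near the player for `"move_closer"`.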

Perception

The development of the perception system was a tale of tight constraints. The original design used the Xenomorph as its main reference. Unfortunately the functionality needed to realise this design was poorly supported in Unreal’s perception system. To avoid a massive scope increase with custom C++ logic, the design was simplified to fit Unreal’s existing systems.

The design intention for perception was an intuitive system that is readable without UI, as UI would break the horror atmosphere. The player shouldn’t be able to tell exactly whether they are just inside or just outside the AI’s vision; if they knew, the fear would be gone. This also means the player has less information, so we can’t expect them to make the same decisions as in stealth games where view cones, detection states or radar maps are available. We had to be more forgiving in some areas.

My work consisted of concepting, implementing and iterating on the AI’s perception with sight and hearing senses. These were implemented in Blueprints and used Unreal’s ‘AIPerception’ system.

The sight sense uses two view cones, one for first seeing the player and a slightly larger one for losing the player. This way the player won’t toggle in and out of sight if they’re right on the edge of a single cone.
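The two-cone idea is a form of hysteresis and can be sketched as below. This is a simplified 2D illustration; the class and function names and the angle and range values are assumptions for the example, not the game's settings.

```python
import math


def in_cone(ai_pos, ai_forward_deg, target_pos, half_angle_deg, max_range):
    """True if the target lies inside a 2D view cone."""
    dx = target_pos[0] - ai_pos[0]
    dy = target_pos[1] - ai_pos[1]
    distance = math.hypot(dx, dy)
    if distance > max_range:
        return False
    if distance == 0:
        return True
    bearing = math.degrees(math.atan2(dy, dx))
    # Smallest absolute angle between the facing direction and the target.
    offset = abs((bearing - ai_forward_deg + 180) % 360 - 180)
    return offset <= half_angle_deg


class Sight:
    """Uses a smaller cone to gain sight and a larger one to lose it."""

    def __init__(self, gain=(45.0, 20.0), lose=(55.0, 25.0)):
        self.gain = gain  # (half angle in degrees, range), hypothetical values
        self.lose = lose  # slightly larger cone used once the player is seen
        self.sees_player = False

    def update(self, ai_pos, ai_forward_deg, player_pos):
        half_angle, max_range = self.lose if self.sees_player else self.gain
        self.sees_player = in_cone(ai_pos, ai_forward_deg, player_pos,
                                   half_angle, max_range)
        return self.sees_player
```

A player standing between the two ranges stays unseen if they were unseen and stays seen if they were seen, so the result doesn't flicker at the edge.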

Once the player is seen a detection timer starts building up. Starting in the ‘default’ state, the AI goes through ‘suspicious’ and reaches ‘alerted’ once the timer is fully built up. The base rate at which this happens depends on the distance to the source. The final rate can still have more modifiers applied to it, for example a Splinter Cell-like system where the detection rate is further modified by the light level.

The distance modifier also makes the system intentionally more forgiving. As the game doesn’t reveal the exact view cone, we don’t ‘punish’ the player as hard when they are seen from far away. This changes the stealth rule from ‘being seen is bad’ to ‘the closer you get, the bigger the risk’, a player decision that better fits the information the game gives them.
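The detection build-up with a distance-based rate could look roughly like this. It is a sketch under assumptions: the linear falloff, the rate limits, the `suspicious_at` threshold and the optional `light_modifier` parameter are illustrative, not values from the game.

```python
def detection_rate(distance, max_range, min_rate=0.2, max_rate=2.0):
    """Detection gained per second: fastest up close, slowest at the edge
    of the sight range (linear falloff is an assumption)."""
    closeness = 1.0 - min(max(distance / max_range, 0.0), 1.0)
    return min_rate + (max_rate - min_rate) * closeness


def tick(detection, distance, max_range, dt, light_modifier=1.0):
    """Advance the detection meter (0..1) while the player is in sight."""
    rate = detection_rate(distance, max_range) * light_modifier
    return min(detection + rate * dt, 1.0)


def state_for(detection, suspicious_at=0.5):
    """Map the meter to the AI state it implies."""
    if detection >= 1.0:
        return "alerted"
    if detection >= suspicious_at:
        return "suspicious"
    return "default"
```

Because the rate shrinks with distance, a glimpse from across a hall costs the player far less than the same exposure at close range.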

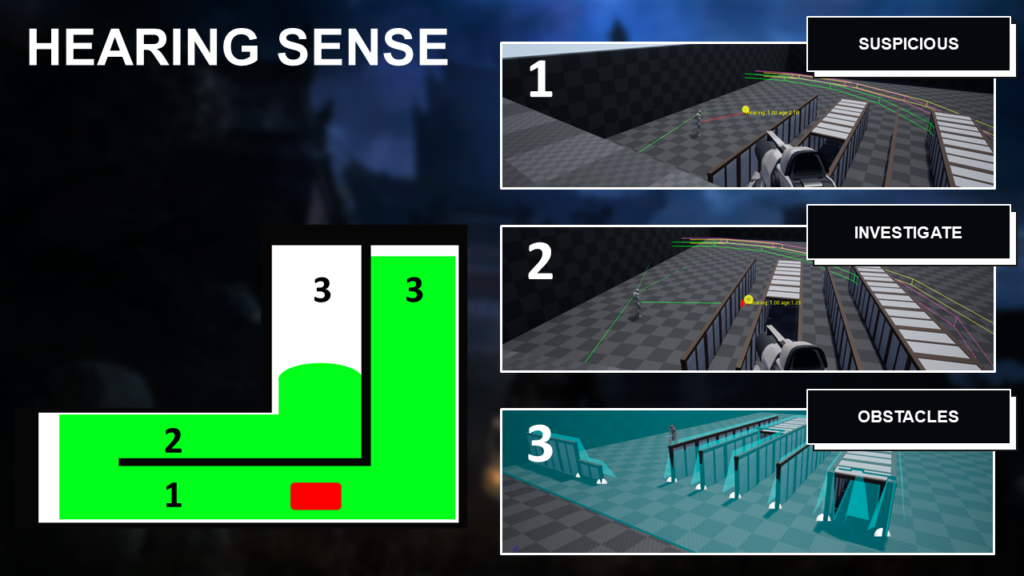

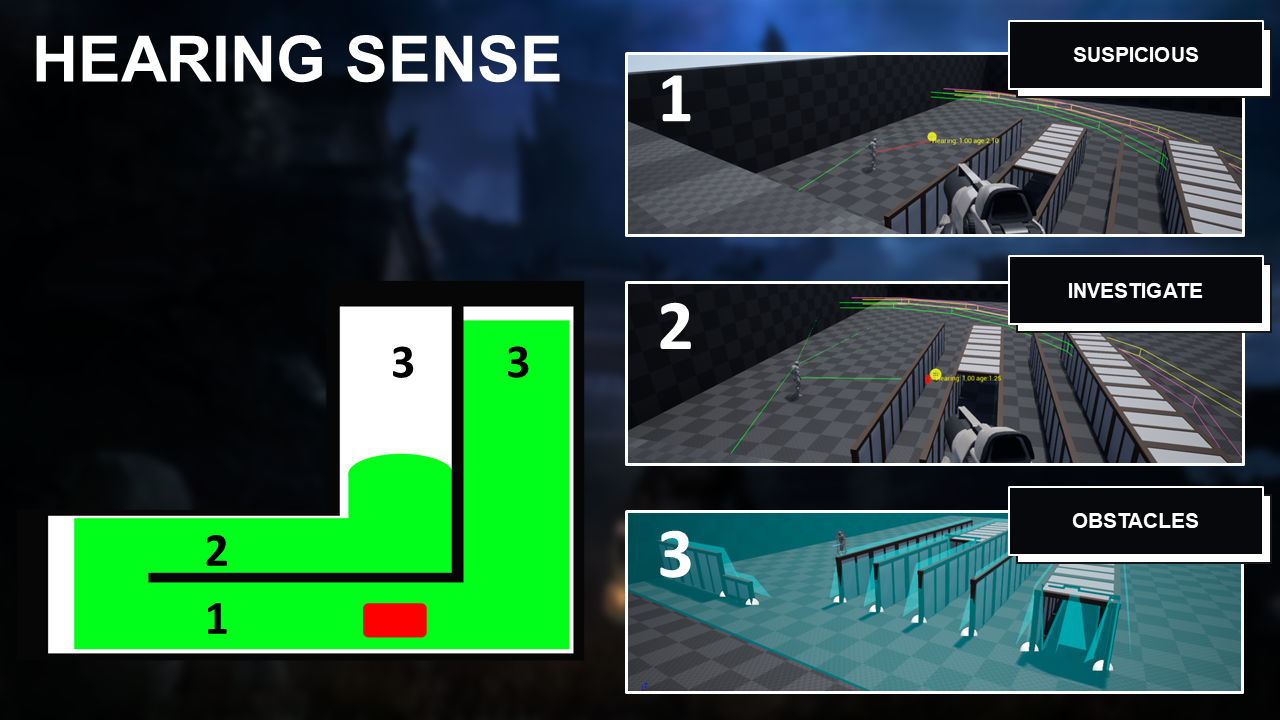

The hearing sense is triggered by reporting a noise event. If the AI is within range of the noise, it is notified.

If the AI has an unobstructed view of the source, it will get suspicious and just look over. A source that can’t be seen will be investigated by the AI.

Unreal’s default system only uses straight-line distance, so I added a system that calculates the distance sound has to travel without passing through walls. This was important as our castle had plenty of rooms that were close to each other in 3D space but not in walking distance. In this game, sound therefore has its own navigation system, which calculates the distance travelled over and around walls instead of straight through them.
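The difference between straight-line distance and travel distance can be illustrated with a small grid search. This is a simplified stand-in, not the game's sound navigation system: the grid layout, the function names and the use of breadth-first search are assumptions for the example.

```python
from collections import deque


def sound_path_distance(grid, start, goal):
    """Shortest distance in cells from start to goal, moving around walls.
    '#' cells are walls. Returns None if the goal is unreachable."""
    rows, cols = len(grid), len(grid[0])
    queue = deque([(start, 0)])
    visited = {start}
    while queue:
        (row, col), steps = queue.popleft()
        if (row, col) == goal:
            return steps
        for d_row, d_col in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (row + d_row, col + d_col)
            if (0 <= nxt[0] < rows and 0 <= nxt[1] < cols
                    and grid[nxt[0]][nxt[1]] != "#"
                    and nxt not in visited):
                visited.add(nxt)
                queue.append((nxt, steps + 1))
    return None


def can_hear(grid, source, listener, hearing_range):
    """The noise is heard only if its travel distance is within range."""
    distance = sound_path_distance(grid, source, listener)
    return distance is not None and distance <= hearing_range


# Two rooms separated by a wall: 2 cells apart in a straight line,
# but the sound has to travel 6 cells around the wall.
CASTLE = [
    "S#L",
    ".#.",
    "...",
]
```

In this layout a noise at `S` with a hearing range of 4 would be heard by a listener at `L` under a pure distance check, but not once the wall is taken into account.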

Movement

The defining feature of the Osakabe’s movement is that it can move between what we call the background and foreground layers.

Foreground: Can be considered the play space, both the AI and the Player walk around here.

Background: The hidden pathways behind the castle’s walls. Only the AI can make use of these.

The design intention for the movement was to populate the entire play space with a single enemy. We didn’t want the player to spot the AI and then fearlessly walk the other way. Nor did we want the player to make a tactical plan upon approaching the enemy, only for it to become useless because the AI teleported. The goal was to balance giving the player enough information to make decisions with keeping the fear of the unknown.

My work consisted of concepting, implementing and iterating on the movement systems. This included the layer system just mentioned, movement variables that depend on the AI’s state, and tweaking the nav-mesh generation to accommodate a wide, tall, nine-armed AI moving through narrow castle corridors.

With this we can solve a lot of issues that come with only having a single enemy.

1. If the player sees the AI in room A they still feel tension entering room B as the AI could still show up there (before them).

2. Similarly, a lot of (horror) tension comes from over-anticipation, fearing an enemy behind every corner. If the player knows the AI is on the other end of the only connecting hallway, that stress is gone as they only have to anticipate a threat from one direction. Now the fear of the unknown is reintroduced, as with every sound the player wonders whether that was the AI moving elsewhere.

While the ‘unknown’ is desirable, it does have its limits. The experience should still feel fair: the player doesn’t want the AI to come out of a wall right behind them when hiding in a corner. Therefore the AI can only move through dedicated entrances. These are subtly marked, and some feedback is also given when the AI uses them. Not only does this keep it fair, it also makes the player feel like they have to use skill to avoid these entrances, which actually causes more stress than every wall being a threat they can’t counter.